

TripleO/RDO based deployments

TripleO is a project aimed at installing, upgrading and operating OpenStack clouds using OpenStack's own cloud facilities as the foundation.

RDO is the OpenStack distribution that runs on top of CentOS, and can be deployed via TripleO.

TripleO Quickstart is an easy way to try out TripleO in a libvirt virtualized environment.

In this document we will stick to the details of installing a 3 controller + 1 compute deployment in high availability through TripleO Quickstart, but the non-quickstart details in this document also apply to TripleO.

Note

This deployment requires 32GB of RAM for the VMs, so your host should have more than 32GB of RAM. If your host has only 32GB, we recommend trimming the compute node memory in "config/nodes/3ctlr_1comp.yml" to 2GB and the controller nodes to 5GB.
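The memory requirement in the note above can be checked before starting. The following is a minimal sketch, not part of tripleo-quickstart: the function names and the `REQUIRED_GB` variable are made up for illustration, and it assumes a Linux host that exposes `/proc/meminfo`.

```shell
#!/usr/bin/env bash
# Sketch: compare the host's total RAM against the 32GB this guide asks for.
# REQUIRED_GB, kib_to_gib and ram_advice are illustrative names only.
REQUIRED_GB=32

# Convert a value in KiB (the unit used by MemTotal in /proc/meminfo)
# to whole GiB, rounding down.
kib_to_gib() {
    echo $(( $1 / 1024 / 1024 ))
}

# Print advice for a host with the given amount of RAM in KiB.
ram_advice() {
    local gib
    gib=$(kib_to_gib "$1")
    if [ "$gib" -gt "$REQUIRED_GB" ]; then
        echo "ok: ${gib}GiB of RAM, the default node sizes should fit"
    else
        echo "trim: ${gib}GiB of RAM, consider lowering node memory in config/nodes/3ctlr_1comp.yml"
    fi
}

# On Linux, report on the real host; silently skipped elsewhere.
if [ -r /proc/meminfo ]; then
    ram_advice "$(awk '/^MemTotal:/ {print $2}' /proc/meminfo)"
fi
```

A host sold as 32GB reports slightly less than 32GiB in `MemTotal`, so it lands in the "trim" branch, which matches the advice in the note.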

Deployment steps

Download the quickstart.sh script with curl:

$ curl -O https://raw.githubusercontent.com/openstack/tripleo-quickstart/master/quickstart.sh

Install the necessary dependencies by running:

$ bash quickstart.sh --install-deps

Clone the tripleo-quickstart and neutron repositories:

$ git clone https://opendev.org/openstack/tripleo-quickstart
$ git clone https://opendev.org/openstack/neutron

Once you're done, run quickstart as follows (3 controller HA + 1 compute):

# Exporting the tags is a workaround until the bug
# https://bugs.launchpad.net/tripleo/+bug/1737602 is resolved
$ export ansible_tags="untagged,provision,environment,libvirt,\
undercloud-scripts,undercloud-inventory,overcloud-scripts,\
undercloud-setup,undercloud-install,undercloud-post-install,\
overcloud-prep-config"
$ bash ./quickstart.sh --tags $ansible_tags --teardown all \
  --release master-tripleo-ci \
  --nodes tripleo-quickstart/config/nodes/3ctlr_1comp.yml \
  --config neutron/tools/tripleo/ovn.yml \
  $VIRTHOST

Note

When deploying directly on localhost, use the loopback address 127.0.0.2 as your $VIRTHOST. The loopback address 127.0.0.1 is reserved by ansible. Also make sure that 127.0.0.2 is accessible via public keys:

$ cat ~/.ssh/id_rsa.pub >> ~/.ssh/authorized_keys

Note

You can adjust RAM/VCPUs by editing config/nodes/3ctlr_1comp.yml before running the above command. If you have enough memory, stick to the defaults. We recommend using 8GB of RAM for the controller nodes.
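When deploying on localhost, the `cat ~/.ssh/id_rsa.pub >> ~/.ssh/authorized_keys` step shown earlier appends the key again each time it is run. A minimal sketch of a variant that only adds the key when it is missing is shown below; `authorize_key` is a hypothetical helper written for this guide, not something shipped with quickstart.

```shell
#!/usr/bin/env bash
# Sketch: add a public key to an authorized_keys file only if it is not
# already listed. authorize_key is an illustrative helper name.
authorize_key() {
    local pubkey_file=$1 auth_file=$2
    local key
    key=$(cat "$pubkey_file") || return 1
    # Make sure the target exists with the permissions sshd expects.
    touch "$auth_file"
    chmod 600 "$auth_file"
    # -q quiet, -x whole-line match, -F fixed string (no regex).
    if ! grep -qxF -- "$key" "$auth_file"; then
        printf '%s\n' "$key" >> "$auth_file"
    fi
}

# Usage with the paths from the note:
#   authorize_key ~/.ssh/id_rsa.pub ~/.ssh/authorized_keys
#   ssh -o BatchMode=yes 127.0.0.2 true   # fails instead of prompting for a password
```

The `BatchMode=yes` check in the usage comment is one way to confirm that key-based login to 127.0.0.2 works before handing the address to quickstart.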

When quickstart has finished you will have 5 VMs ready to be used: 1 for the undercloud (TripleO's node to deploy your OpenStack from), 3 for the controller nodes and 1 for the compute node.

Log in to the undercloud:

$ ssh -F ~/.quickstart/ssh.config.ansible undercloud

Prepare overcloud container images:

[stack@undercloud ~]$ ./overcloud-prep-containers.sh

Run inside the undercloud:

[stack@undercloud ~]$ ./overcloud-deploy.sh

Grab a coffee; this may take around 1 hour, depending on your hardware.

If anything goes wrong, go to IRC on freenode and ask on #oooq.

Description of the environment

Once deployed, two files are present in the undercloud root directory: stackrc and overcloudrc. They let you connect to the APIs of the undercloud (managing the OpenStack nodes) and of the overcloud (where your instances would live).
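Because the same `openstack` client talks to either cloud depending on which file was sourced last, a small wrapper can make the choice explicit. This is only a sketch: `use_cloud` and `RC_DIR` are hypothetical names, and it assumes both rc files sit in the stack user's home directory, as the prompts in this guide suggest.

```shell
#!/usr/bin/env bash
# Sketch: select which cloud the openstack CLI will talk to by sourcing
# the matching rc file. use_cloud and RC_DIR are illustrative names.
RC_DIR=${RC_DIR:-$HOME}

use_cloud() {
    local rc
    case $1 in
        undercloud) rc=$RC_DIR/stackrc ;;
        overcloud)  rc=$RC_DIR/overcloudrc ;;
        *) echo "usage: use_cloud undercloud|overcloud" >&2; return 2 ;;
    esac
    if [ ! -r "$rc" ]; then
        echo "cannot read $rc" >&2
        return 1
    fi
    # Source into the current shell so the exported variables persist.
    . "$rc"
}

# Usage on the undercloud:
#   use_cloud undercloud && openstack server list
#   use_cloud overcloud  && openstack network list
```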

We can find out the existing controller/computes this way:

[stack@undercloud ~]$ source stackrc

(undercloud) [stack@undercloud ~]$ openstack server list -c Name -c Networks -c Flavor

+-------------------------+------------------------+--------------+

| Name | Networks | Flavor |

+-------------------------+------------------------+--------------+

| overcloud-controller-1 | ctlplane=192.168.24.16 | oooq_control |

| overcloud-controller-0 | ctlplane=192.168.24.14 | oooq_control |

| overcloud-controller-2 | ctlplane=192.168.24.12 | oooq_control |

| overcloud-novacompute-0 | ctlplane=192.168.24.13 | oooq_compute |

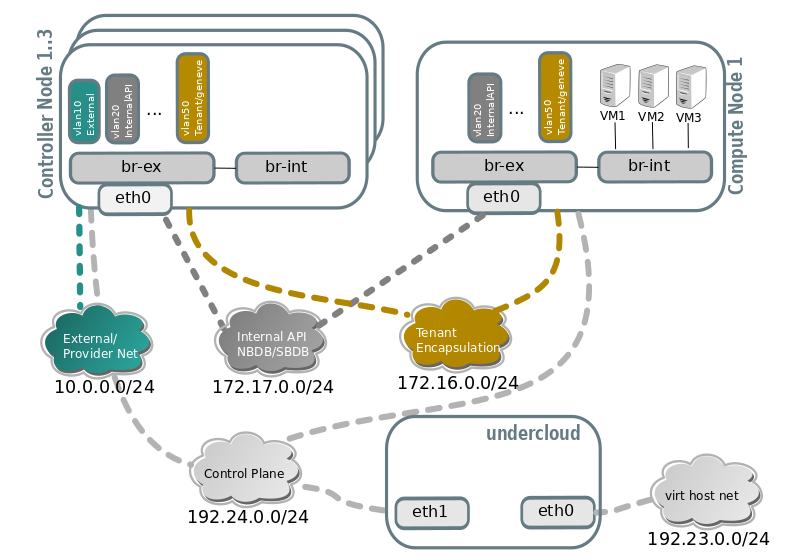

+-------------------------+------------------------+--------------+Network architecture of the environment

Connecting to one of the nodes via ssh

We can connect to the IP address in the openstack server list we showed before.
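Rather than copying the address by hand, it can be looked up by node name. The sketch below assumes the Networks column is rendered as `ctlplane=<ip>`, as in the listing earlier in this guide (other client versions may format it differently); `node_ip` is a hypothetical helper, while `-f value` and `-c` are standard output options of the `openstack` client.

```shell
#!/usr/bin/env bash
# Sketch: read "name ctlplane=<ip>" lines on stdin and print the address
# of the node whose name matches the first argument.
# node_ip is an illustrative helper, not part of any OpenStack tool.
node_ip() {
    awk -v name="$1" '$1 == name { sub(/^ctlplane=/, "", $2); print $2 }'
}

# Usage on the undercloud, after "source stackrc":
#   ip=$(openstack server list -f value -c Name -c Networks \
#        | node_ip overcloud-controller-1)
#   ssh heat-admin@"$ip"
```

Done by hand, the same connection looks like this: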

(undercloud) [stack@undercloud ~]$ ssh heat-admin@192.168.24.16
Last login: Wed Feb 21 14:11:40 2018 from 192.168.24.1

[heat-admin@overcloud-controller-1 ~]$ ps fax | grep ovn-controller
20422 ? S<s 30:40 ovn-controller unix:/var/run/openvswitch/db.sock -vconsole:emer -vsyslog:err -vfile:info --no-chdir --log-file=/var/log/openvswitch/ovn-controller.log --pidfile=/var/run/openvswitch/ovn-controller.pid --detach

[heat-admin@overcloud-controller-1 ~]$ sudo ovs-vsctl show
bb413f44-b74f-4678-8d68-a2c6de725c73
    Bridge br-ex
        fail_mode: standalone
        ...
        Port "patch-provnet-84d63c87-aad1-43d0-bdc9-dca5145b6fe6-to-br-int"
            Interface "patch-provnet-84d63c87-aad1-43d0-bdc9-dca5145b6fe6-to-br-int"
                type: patch
                options: {peer="patch-br-int-to-provnet-84d63c87-aad1-43d0-bdc9-dca5145b6fe6"}
        Port "eth0"
            Interface "eth0"
    ...
    Bridge br-int
        fail_mode: secure
        Port "ovn-c8b85a-0"
            Interface "ovn-c8b85a-0"
                type: geneve
                options: {csum="true", key=flow, remote_ip="172.16.0.17"}
        Port "ovn-b5643d-0"
            Interface "ovn-b5643d-0"
                type: geneve
                options: {csum="true", key=flow, remote_ip="172.16.0.14"}
        Port "ovn-14d60a-0"
            Interface "ovn-14d60a-0"
                type: geneve
                options: {csum="true", key=flow, remote_ip="172.16.0.12"}
        Port "patch-br-int-to-provnet-84d63c87-aad1-43d0-bdc9-dca5145b6fe6"
            Interface "patch-br-int-to-provnet-84d63c87-aad1-43d0-bdc9-dca5145b6fe6"
                type: patch
                options: {peer="patch-provnet-84d63c87-aad1-43d0-bdc9-dca5145b6fe6-to-br-int"}
        Port br-int
            Interface br-int
                type: internal

Initial resource creation

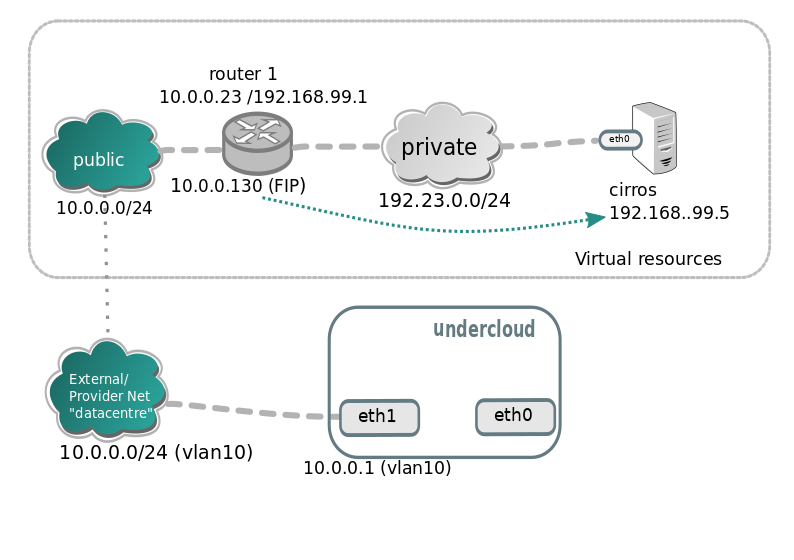

Well, now you have a virtual cloud with 3 controllers in HA, and one compute node, but no instances or routers running. We can give it a try and create a few resources:

You can use the following script to create the resources.

ssh -F ~/.quickstart/ssh.config.ansible undercloud

source ~/overcloudrc

# Test image
curl http://download.cirros-cloud.net/0.4.0/cirros-0.4.0-x86_64-disk.img \
  > cirros-0.4.0-x86_64-disk.img
openstack image create "cirros" --file cirros-0.4.0-x86_64-disk.img \
  --disk-format qcow2 --container-format bare --public

# External provider network and its subnet
openstack network create public --provider-physical-network datacentre \
  --provider-network-type vlan \
  --provider-segment 10 \
  --external --share
openstack subnet create --network public public --subnet-range 10.0.0.0/24 \
  --allocation-pool start=10.0.0.20,end=10.0.0.250 \
  --dns-nameserver 8.8.8.8 --gateway 10.0.0.1 \
  --no-dhcp

# Private tenant network, routed to the public network
openstack network create private
openstack subnet create --network private private \
  --subnet-range 192.168.99.0/24
openstack router create router1
openstack router set --external-gateway public router1
openstack router add subnet router1 private

# Security group allowing SSH and ICMP in, and all traffic out
openstack security group create test
openstack security group rule create --ingress --protocol tcp \
  --dst-port 22 test
openstack security group rule create --ingress --protocol icmp test
openstack security group rule create --egress test

# Flavor, instance and floating IP
openstack flavor create m1.tiny --disk 1 --vcpus 1 --ram 64
PRIV_NET=$(openstack network show private -c id -f value)
openstack server create --flavor m1.tiny --image cirros \
  --nic net-id="$PRIV_NET" --security-group test \
  --wait cirros
openstack floating ip create --floating-ip-address 10.0.0.130 public
openstack server add floating ip cirros 10.0.0.130

Note

You can now log in to the instance if you want. In a CirrOS image of version 0.4.0 or later, the login account is cirros and the password is gocubsgo.

(overcloud) [stack@undercloud ~]$ ssh cirros@10.0.0.130
cirros@10.0.0.130's password:

$ ip a | grep eth0 -A 10
2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1442 qdisc pfifo_fast qlen 1000
    link/ether fa:16:3e:85:b4:66 brd ff:ff:ff:ff:ff:ff
    inet 192.168.99.5/24 brd 192.168.99.255 scope global eth0
       valid_lft forever preferred_lft forever
    inet6 fe80::f816:3eff:fe85:b466/64 scope link
       valid_lft forever preferred_lft forever

$ ping 10.0.0.1
PING 10.0.0.1 (10.0.0.1): 56 data bytes
64 bytes from 10.0.0.1: seq=0 ttl=63 time=2.145 ms
64 bytes from 10.0.0.1: seq=1 ttl=63 time=1.025 ms
64 bytes from 10.0.0.1: seq=2 ttl=63 time=0.836 ms
^C
--- 10.0.0.1 ping statistics ---
3 packets transmitted, 3 packets received, 0% packet loss
round-trip min/avg/max = 0.836/1.335/2.145 ms

$ ping 8.8.8.8
PING 8.8.8.8 (8.8.8.8): 56 data bytes
64 bytes from 8.8.8.8: seq=0 ttl=52 time=3.943 ms
64 bytes from 8.8.8.8: seq=1 ttl=52 time=4.519 ms
64 bytes from 8.8.8.8: seq=2 ttl=52 time=3.778 ms

$ curl http://169.254.169.254/2009-04-04/meta-data/instance-id
i-00000002
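
The MTU of 1442 reported for eth0 in the session above is lower than the usual 1500 because tenant traffic between nodes travels inside Geneve tunnels. The arithmetic can be sketched as follows, under assumptions this guide does not state: a 1500-byte underlay MTU, a 38-byte allowance for Geneve encapsulation and a 20-byte outer IPv4 header. The variable and function names are illustrative.

```shell
#!/usr/bin/env bash
# Sketch: derive the tenant network MTU from the underlay MTU.
# The two overhead figures are assumptions, not values taken from
# this guide.
GENEVE_OVERHEAD=38      # assumed Geneve encapsulation allowance, in bytes
OUTER_IPV4_HEADER=20    # outer IPv4 header without options, in bytes

# Print the MTU left for instances given the underlay MTU in $1.
tenant_mtu() {
    echo $(( $1 - GENEVE_OVERHEAD - OUTER_IPV4_HEADER ))
}

tenant_mtu 1500   # prints 1442, matching the eth0 output above
```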