

Storage architecture

Introduction

OpenStack has multiple storage realms to consider:

- Block Storage (cinder)

- Object Storage (swift)

- Image storage (glance)

- Ephemeral storage (nova)

Block Storage (cinder)

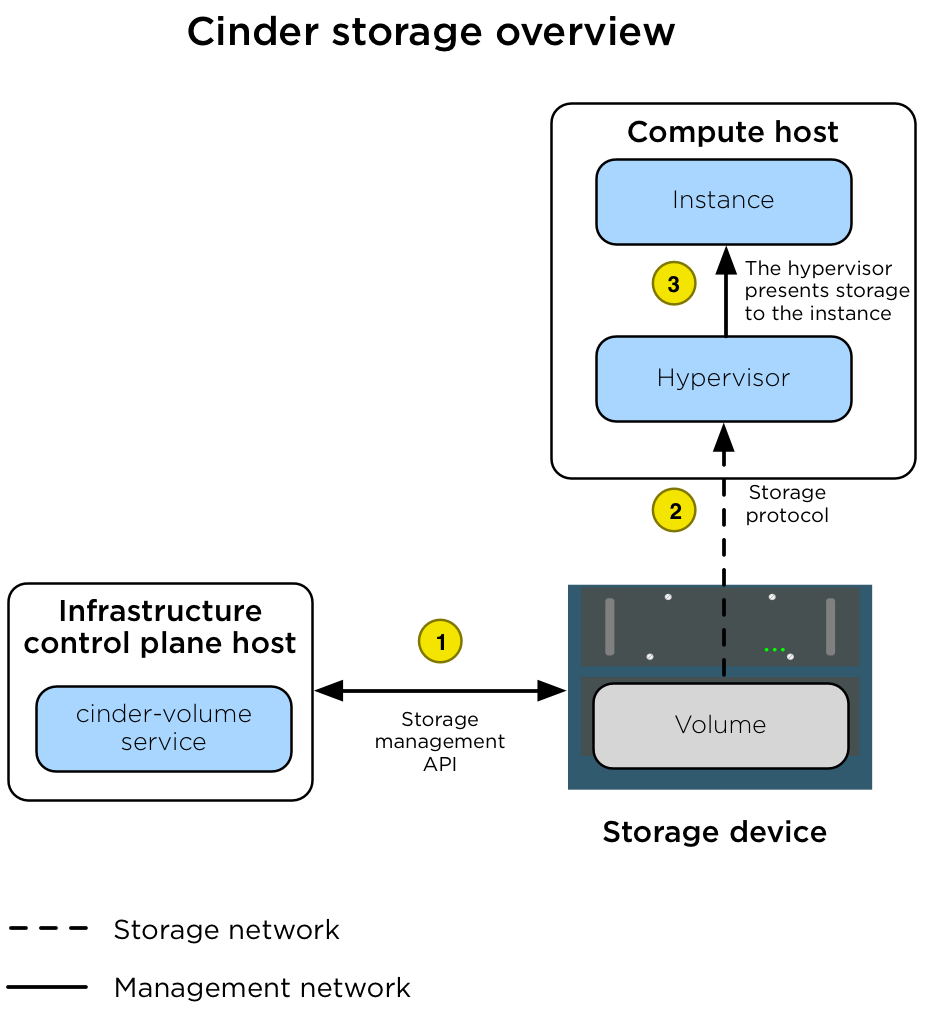

The Block Storage (cinder) service manages volumes on storage devices in an environment. In a production deployment, the storage device presents two things. It presents storage via a storage protocol (for example, NFS, iSCSI, or Ceph RBD) to the storage network (br-storage). It also presents a storage management API to the management network (br-mgmt).

The hypervisor on the Compute host connects instances to their volumes via the storage network. The following diagram illustrates how Block Storage is connected to instances.

| 1. | The assigned cinder-volume service creates the volume using the appropriate cinder driver. The driver communicates with the storage device through the management API presented on the management network. |
| 2. | After the volume is created, the nova-compute service connects the Compute host hypervisor to the volume via the storage network. |
| 3. | After the hypervisor is connected to the volume, it presents the volume to the instance as a local hardware device. |
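The three steps above can be modeled as plain data that records which network each hop uses. This makes it visible that only the control path crosses br-mgmt, while the data path uses br-storage. This is a hypothetical sketch: `Step` and `volume_attach_flow` are illustrative names, not part of cinder or nova. Only the bridge names come from this page.

```python
from dataclasses import dataclass
from typing import List, Optional

# Bridge names come from the text; everything else is a hypothetical model.
MGMT_NET = "br-mgmt"
STORAGE_NET = "br-storage"


@dataclass(frozen=True)
class Step:
    actor: str
    network: Optional[str]  # None means no network hop is involved
    action: str


def volume_attach_flow(protocol: str) -> List[Step]:
    """Return the three steps that take a new volume to a running instance."""
    return [
        # 1. cinder-volume drives the storage device's management API.
        Step("cinder-volume", MGMT_NET,
             "create the volume via the cinder driver and the storage management API"),
        # 2. nova-compute attaches the hypervisor to the volume over the data path.
        Step("nova-compute", STORAGE_NET,
             f"connect the hypervisor to the volume using {protocol}"),
        # 3. The hypervisor exposes the volume locally; no network hop here.
        Step("hypervisor", None,
             "present the volume to the instance as a local hardware device"),
    ]


for step in volume_attach_flow("iSCSI"):
    print(f"{step.actor:<14} {step.network or '-':<11} {step.action}")
```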

Important

The LVMVolumeDriver is designed as a reference driver implementation, and we do not recommend it for production use. The LVM storage back-end is a single-server solution that provides no high availability options. If the server becomes unavailable, all volumes managed by the cinder-volume service running on that server become unavailable. Upgrading operating system packages on the server (for example, the kernel or iSCSI packages) causes storage connectivity outages, because the iSCSI service (or the host) restarts.

Due to a limitation with container iSCSI connectivity, you must deploy the cinder-volume service directly on a physical host (not in a container) when using storage back-ends that connect via iSCSI. This includes the LVMVolumeDriver and many of the drivers for commercial storage devices.
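The two rules in this warning can be expressed as small functions:

- Where cinder-volume may run for a given back-end.
- Which volumes are lost when a single-server back-end such as LVM goes down.

This is a hypothetical sketch with illustrative names. The rule that non-iSCSI protocols can run in a container is an ASSUMPTION made here for illustration; this page only states the iSCSI case.

```python
from dataclasses import dataclass
from typing import Dict, Set


@dataclass(frozen=True)
class Backend:
    name: str
    protocol: str  # protocol the back-end uses on the storage network


def cinder_volume_placement(backend: Backend) -> str:
    # iSCSI back-ends hit the container iSCSI connectivity limitation, so
    # cinder-volume must run on the physical host.
    # ASSUMPTION: other protocols are treated as container-safe in this sketch.
    if backend.protocol.lower() == "iscsi":
        return "physical host"
    return "container"


def unavailable_volumes(volume_hosts: Dict[str, str], failed_host: str) -> Set[str]:
    # A single-server back-end such as LVM has no failover, so every volume
    # managed from the failed server becomes unavailable.
    return {vol for vol, host in volume_hosts.items() if host == failed_host}
```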

Note

The cinder-volume service does not run in a highly available configuration. The cinder-volume service may be configured to manage volumes on the same back-end from multiple hosts or containers. Even then, one service is scheduled to manage the life cycle of each volume until an alternative service is assigned to do so. You can make this assignment by using the cinder-manage CLI tool. This may change in the future if the cinder volume active-active support spec is implemented.
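The ownership rule in this note can be sketched as a mapping from each volume to the single service that manages it. If that service's host goes down, the volume stays unmanageable until an operator reassigns it. This is a hypothetical Python model: `VolumeOwnership` and its methods are illustrative and do not correspond to cinder's internal API. `reassign` merely stands in for the manual step done with the cinder-manage CLI.

```python
from typing import Dict, Set


class VolumeOwnership:
    """Hypothetical model: each volume is managed by exactly one cinder-volume
    service at a time, even when several services share the same back-end."""

    def __init__(self) -> None:
        self._owner: Dict[str, str] = {}  # volume ID -> cinder-volume host

    def create(self, volume_id: str, service_host: str) -> None:
        # The scheduler assigns the new volume to one service.
        self._owner[volume_id] = service_host

    def manager_of(self, volume_id: str) -> str:
        return self._owner[volume_id]

    def can_manage(self, volume_id: str, live_hosts: Set[str]) -> bool:
        # Life-cycle operations need the owning service; other services on the
        # same back-end do not take over automatically.
        return self._owner[volume_id] in live_hosts

    def reassign(self, volume_id: str, new_host: str) -> None:
        # Stands in for the manual reassignment done with the cinder-manage CLI.
        self._owner[volume_id] = new_host
```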

Object Storage (swift)

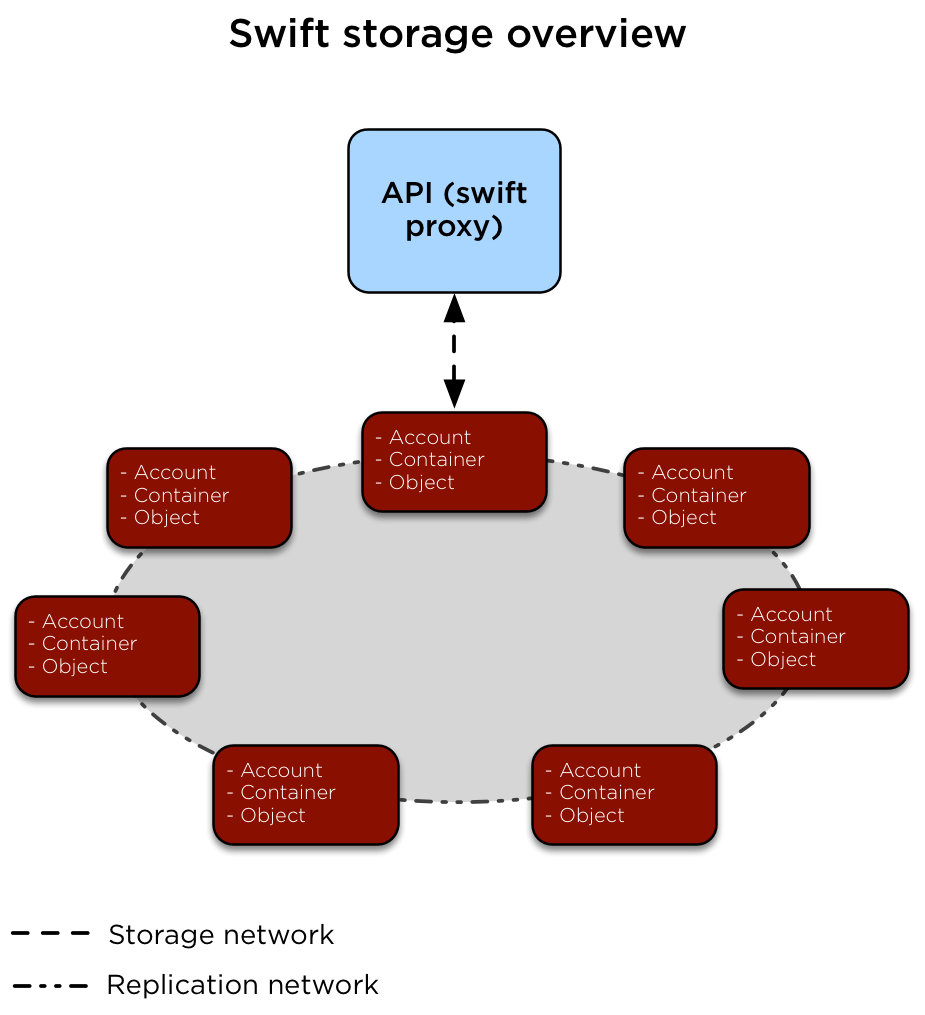

The Object Storage (swift) service implements a highly available, distributed, eventually consistent object/blob store which is accessible via HTTP/HTTPS.

The following diagram illustrates how data is accessed and replicated.
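Swift decides where replicas live by hashing the object path into a ring partition and mapping that partition to several devices. The sketch below is a simplified model of that idea, not swift's code. The partition step follows swift's approach of taking the top bits of an MD5 hash, but it omits the cluster-specific hash path prefix and suffix. The device assignment is a plain round-robin stand-in for swift's ring builder, which also weighs devices and spreads replicas across zones and regions. All function names are hypothetical.

```python
import hashlib
import struct
from typing import List


def partition_for(path: str, part_power: int) -> int:
    """Map an object path such as /account/container/object to a partition.

    Uses the top `part_power` bits of the path's MD5 digest. Real swift also
    mixes in a hash path prefix/suffix, omitted here.
    """
    digest = hashlib.md5(path.encode("utf-8")).digest()
    (top32,) = struct.unpack_from(">I", digest)  # first 4 bytes, big-endian
    return top32 >> (32 - part_power)


def replica_devices(partition: int, devices: List[str], replicas: int = 3) -> List[str]:
    """Pick `replicas` distinct devices for a partition (round-robin stand-in)."""
    if replicas > len(devices):
        raise ValueError("need at least as many devices as replicas")
    return [devices[(partition + i) % len(devices)] for i in range(replicas)]


devices = ["d0", "d1", "d2", "d3", "d4"]
part = partition_for("/AUTH_demo/photos/cat.jpg", part_power=10)
print(part, replica_devices(part, devices))
```

Because placement is a pure function of the path, any proxy can locate an object's replicas without a central lookup.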

Image storage (glance)

The Image service (glance) may be configured to store images on a variety of storage back-ends supported by the glance_store drivers.

Important

When using the File System store, glance has no mechanism of its own to replicate images between glance hosts. We recommend using a shared storage back-end (via a file system mount) to ensure that all glance-api services have access to all images. This prevents losing access to images when a control plane host is lost.
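The difference between per-host directories and a shared mount can be modeled by treating each store directory as a dictionary. With local directories, an image uploaded through one host is gone when that host is lost. With a shared mount, every host sees the same directory. This is a hypothetical sketch: `FileStoreHost` and `surviving_hosts_with_image` are illustrative names, not glance_store classes.

```python
from typing import Dict, List


class FileStoreHost:
    """Hypothetical glance-api host whose File System store directory is
    modeled as a dict of image ID -> image bytes."""

    def __init__(self, name: str, datadir: Dict[str, bytes]) -> None:
        self.name = name
        self.datadir = datadir  # a shared mount means hosts share one dict

    def upload(self, image_id: str, data: bytes) -> None:
        self.datadir[image_id] = data

    def has(self, image_id: str) -> bool:
        return image_id in self.datadir


def surviving_hosts_with_image(hosts: List[FileStoreHost], lost: str, image_id: str) -> List[str]:
    # Hosts that are still up and can still serve the image.
    return [h.name for h in hosts if h.name != lost and h.has(image_id)]


# Local directories: glance does not replicate images between hosts.
local = [FileStoreHost("ctl1", {}), FileStoreHost("ctl2", {})]
local[0].upload("img-1", b"image bytes")
print(surviving_hosts_with_image(local, lost="ctl1", image_id="img-1"))   # []

# Shared mount: both hosts see the same directory.
shared_dir: Dict[str, bytes] = {}
shared = [FileStoreHost("ctl1", shared_dir), FileStoreHost("ctl2", shared_dir)]
shared[0].upload("img-1", b"image bytes")
print(surviving_hosts_with_image(shared, lost="ctl1", image_id="img-1"))  # ['ctl2']
```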

The following diagram illustrates the interactions between the glance service, the storage device, and the nova-compute service when an instance is created.

| 1. | When a client requests an image, the glance-api service accesses the appropriate store on the storage device over the storage network (br-storage) and pulls the image into its cache. When the same image is requested again, it is served to the client directly from the cache instead of being re-requested from the storage device. |
| 2. | When an instance is scheduled for creation on a Compute host, the nova-compute service requests the image from the glance-api service over the management network (br-mgmt). |
| 3. | After the image is retrieved, the nova-compute service stores it in its own image cache. When another instance is created with the same image, the image is retrieved from the local base image cache instead of being re-requested from the glance-api service. |
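The two cache layers in these steps can be sketched as two read-through caches stacked on each other. Repeated boots of the same image on one Compute host never reach glance-api. A second Compute host reaches glance-api but not the storage device. This is a hypothetical model: the classes and counters are illustrative and do not reflect glance's or nova's actual cache implementations (which also handle eviction, which is omitted here).

```python
from typing import Callable, Dict


class GlanceApi:
    """Hypothetical model of step 1: glance-api caches images it pulls from the store."""

    def __init__(self, store_fetch: Callable[[str], bytes]) -> None:
        self._store_fetch = store_fetch  # reads from the storage device over br-storage
        self._cache: Dict[str, bytes] = {}
        self.store_reads = 0

    def get_image(self, image_id: str) -> bytes:
        if image_id not in self._cache:
            self.store_reads += 1
            self._cache[image_id] = self._store_fetch(image_id)
        return self._cache[image_id]


class NovaCompute:
    """Hypothetical model of steps 2 and 3: nova-compute keeps a local base image cache."""

    def __init__(self, glance: GlanceApi) -> None:
        self._glance = glance  # requests go to glance-api over br-mgmt
        self._base_images: Dict[str, bytes] = {}
        self.glance_requests = 0

    def boot(self, image_id: str) -> bytes:
        if image_id not in self._base_images:
            self.glance_requests += 1
            self._base_images[image_id] = self._glance.get_image(image_id)
        return self._base_images[image_id]


glance = GlanceApi(lambda image_id: b"data for " + image_id.encode())
compute1, compute2 = NovaCompute(glance), NovaCompute(glance)
compute1.boot("ubuntu")
compute1.boot("ubuntu")  # served from compute1's base image cache
compute2.boot("ubuntu")  # compute2 asks glance-api, which serves from its cache
print(glance.store_reads, compute1.glance_requests, compute2.glance_requests)  # 1 1 1
```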

Ephemeral storage (nova)

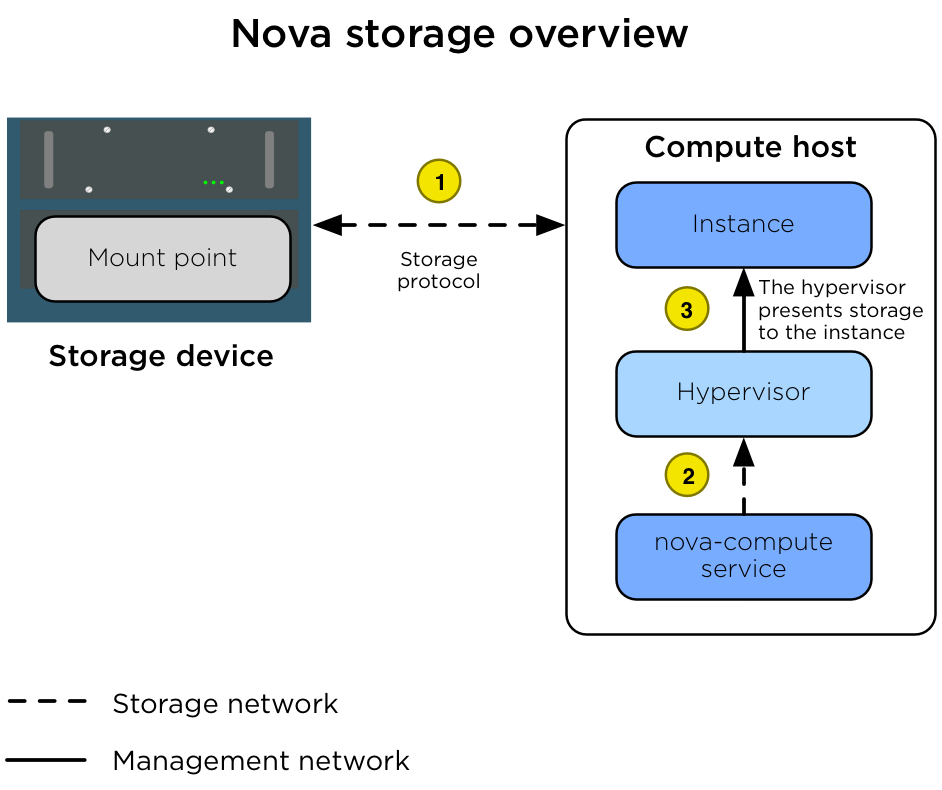

When the flavors in the Compute service are configured to provide instances with root or ephemeral disks, the nova-compute service manages these allocations using its ephemeral disk storage location.

In many environments, the ephemeral disks are stored on the Compute host's local disks. For production environments, however, we recommend configuring the Compute hosts to use a shared storage subsystem instead.

A shared storage subsystem enables quick live migration of instances between Compute hosts. This is useful when an administrator needs to perform maintenance on a Compute host and wants to evacuate it. A shared storage subsystem also allows instances to be recovered when a Compute host goes offline: the administrator can evacuate the instances to another Compute host and boot them there.
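The recovery argument above comes down to where the instance disk lives. The sketch below only answers one question: after a host fails, can another host boot the instance from its existing disk? It ignores the other checks nova performs, such as capacity and scheduling. The function names are hypothetical, and the rule that the first healthy host in sorted order is chosen is an arbitrary choice for illustration.

```python
from typing import Optional, Set


def disk_location(shared_storage: bool, compute_host: str) -> str:
    """Where the instance disk lives: on the shared storage subsystem or on the host."""
    return "shared" if shared_storage else compute_host


def evacuation_target(disk_loc: str, failed_host: str, candidates: Set[str]) -> Optional[str]:
    """Pick a host that can boot the instance from its existing disk after
    failed_host goes offline, or None if no host can reach that disk.

    A disk on the failed host's local storage is unreachable from elsewhere.
    """
    if disk_loc == failed_host:
        return None
    healthy = sorted(candidates - {failed_host})
    return healthy[0] if healthy else None
```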

The following diagram illustrates the interactions between the storage device, the Compute host, the hypervisor, and the instance.

| 1. | The Compute host is configured with access to the storage device. The Compute host accesses the storage space via the storage network (br-storage) using a storage protocol (for example, NFS, iSCSI, or Ceph RBD). |
| 2. | The nova-compute service configures the hypervisor to present the allocated instance disk as a device to the instance. |
| 3. | The hypervisor presents the disk as a device to the instance. |